Explore the fundamentals of causal inference and discovery using Python. Learn how to implement causal inference with libraries like DoWhy and CausalInference. Discover causal structures and analyze data to uncover causal relationships, enabling informed decision-making in data science and real-world applications.

Master the tools and techniques to model causal assumptions and estimate treatment effects. This introduction provides a solid foundation for understanding and applying causal inference in Python effectively.

What is Causal Inference?

Causal inference is the process of determining whether a specific intervention or factor causes a particular outcome. It involves analyzing data to establish cause-effect relationships, going beyond mere correlation. Unlike traditional machine learning, which focuses on prediction, causal inference aims to identify causal mechanisms. Key concepts include potential outcomes, causal graphs, and addressing biases like confounding variables. Tools like Python’s DoWhy library simplify this process by modeling causal assumptions and testing interventions. Causal inference is essential for answering “what if” questions, enabling informed decision-making in fields such as healthcare, business, and policy.

Importance of Causal Inference in Data Science

Causal inference is crucial in data science as it enables researchers to move beyond mere correlation and uncover true cause-effect relationships. By identifying causal mechanisms, data scientists can make informed decisions, predict outcomes of interventions, and evaluate the effectiveness of policies or treatments. Unlike traditional machine learning, which focuses on prediction, causal inference provides actionable insights, helping organizations optimize strategies and allocate resources effectively. It is particularly valuable in fields like healthcare, business analytics, and policy-making, where understanding causality can lead to significant improvements in outcomes. Addressing challenges like confounders and selection bias ensures more reliable analyses, making causal inference indispensable for data-driven decision-making.

Overview of Causal Discovery

Causal discovery is the process of identifying causal relationships from observational data. Whereas causal inference typically estimates the effect of a given treatment under an assumed causal structure, causal discovery aims to learn that structure from the data. It combines statistical methods and domain knowledge to infer directed causal relationships between variables. Techniques such as Bayesian networks, structural equation modeling, and constraint-based algorithms (e.g., the PC algorithm) are commonly used. These methods help in constructing causal graphs, which visually represent relationships and potential confounders. Causal discovery is particularly useful when prior knowledge of causal mechanisms is limited, enabling data-driven insights into underlying processes. Its output is a hypothesis about the causal structure that rests on assumptions such as faithfulness and the absence of unmeasured common causes, and it provides a starting point for further causal analysis.

Challenges in Causal Inference

Causal inference faces challenges like confounding, selection bias, reverse causality, and measurement error. Complex relationships, missing data, and model dependence further complicate accurate causal analysis and interpretation.

Confounders and Their Impact

Confounders are variables that influence both the treatment and the outcome, creating a spurious association. If left unaddressed, confounders bias the causal effect estimate, making it difficult to isolate the true impact of the treatment. For example, in observational studies, factors like age or socioeconomic status might confound the relationship between variables. Ignoring confounders leads to invalid conclusions, as the observed effect may not be due to the treatment but rather the confounding variable. Addressing confounders is critical and often achieved through methods like matching, stratification, or adjusting for them in models. Proper handling of confounders ensures more reliable causal inferences and valid conclusions.
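The bias described above can be reproduced in a small simulation. The sketch below assumes a made-up data-generating process in which `age` drives both treatment and outcome and the true treatment effect is 1.0; the variable names and coefficients are illustrative, not taken from any real study.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 20_000

# Hypothetical data-generating process: age confounds treatment and outcome.
age = rng.normal(size=n)
treatment = (age + rng.normal(size=n) > 0).astype(float)
true_effect = 1.0
outcome = true_effect * treatment + 2.0 * age + rng.normal(size=n)

# Naive estimate: raw difference in mean outcome between the two groups.
naive = outcome[treatment == 1].mean() - outcome[treatment == 0].mean()

# Adjusted estimate: OLS of the outcome on the treatment and the confounder.
X = np.column_stack([np.ones(n), treatment, age])
coef, *_ = np.linalg.lstsq(X, outcome, rcond=None)
adjusted = coef[1]

print(f"naive: {naive:.2f}, adjusted: {adjusted:.2f}, truth: {true_effect}")
```

The naive difference absorbs the effect of age, while the adjusted coefficient lands close to the true effect. Adjustment works here only because the confounder is measured and the outcome model is correctly specified.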

Selection Bias in Observational Data

Selection bias arises when the sample data is not representative of the population due to non-random selection. In observational studies, this occurs when the treatment and control groups differ systematically, often due to unobserved factors. Unlike randomized controlled trials, where subjects are randomly assigned, observational data is prone to self-selection or non-random assignment. This bias can lead to incorrect causal conclusions, as the observed effects may be influenced by differences between groups rather than the treatment itself. Addressing selection bias requires careful data collection, matching techniques, or statistical adjustments to ensure comparability between groups. Ignoring it can result in misleading inferences about causal relationships.

Challenges in Real-World Applications

Applying causal inference in real-world scenarios presents several challenges. One major issue is the complexity of data, often characterized by high dimensionality, missing values, and temporal dependencies. Identifying confounders and ensuring their measurement is non-trivial, especially in observational settings. Additionally, real-world data may exhibit non-linear relationships and interactions, complicating causal modeling. Interventions or treatments may vary over time, introducing dynamic effects that require specialized methods. Practical constraints, such as limited sample sizes or incomplete data, further hinder accurate causal analysis. Addressing these challenges demands robust methodologies, domain expertise, and careful validation to ensure reliable insights for decision-making.

Key Concepts in Causal Inference

Key concepts in causal inference include potential outcomes, causal graphs, confounders, and the do-operator, which form the foundation of causal reasoning and analysis.

Potential Outcomes Framework

The Potential Outcomes Framework is a foundational concept in causal inference, introduced by Rubin, which conceptualizes causal effects by considering outcomes under different treatments. For each unit, there exists a potential outcome for each possible treatment. However, only one treatment is observed, leading to counterfactual outcomes that are unobserved. This framework is crucial for understanding causal relationships, as it provides a structure to estimate treatment effects even when only one outcome is observed. In practice, it underpins methodologies like Randomized Controlled Trials (RCTs) and observational studies, where assumptions like the Stable Unit Treatment Value Assumption (SUTVA) are essential for valid causal inferences. Python libraries such as DoWhy and CausalInference implement these concepts, aiding in the estimation of causal effects based on potential outcomes. This framework is widely applied in various fields, including business analytics, public policy, and healthcare, to evaluate the impact of interventions accurately.
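A toy table makes the framework concrete. The numbers below are invented for illustration; in real data only one of the two potential outcomes is ever observed for each unit, so the "true" quantities computed here are unavailable in practice.

```python
# Hypothetical potential outcomes for six units.
y0 = [3, 5, 2, 6, 4, 4]        # outcome if the unit is not treated
y1 = [5, 6, 4, 9, 5, 7]        # outcome if the unit is treated
treated = [1, 0, 1, 0, 0, 1]   # one particular treatment assignment

# Individual effects and the true average treatment effect (ATE).
ite = [a - b for a, b in zip(y1, y0)]
true_ate = sum(ite) / len(ite)

# What an analyst actually sees: y1 for treated units, y0 for the rest.
observed = [a if t else b for a, b, t in zip(y1, y0, treated)]
treated_obs = [y for y, t in zip(observed, treated) if t]
control_obs = [y for y, t in zip(observed, treated) if not t]
observed_diff = sum(treated_obs) / len(treated_obs) - sum(control_obs) / len(control_obs)

print(true_ate)        # 2.0
print(observed_diff)   # about 0.33 for this assignment
```

The gap between the two numbers arises because, under this assignment, the treated units happen to have lower untreated outcomes than the control units, which is the kind of imbalance that randomization or adjustment is meant to remove.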

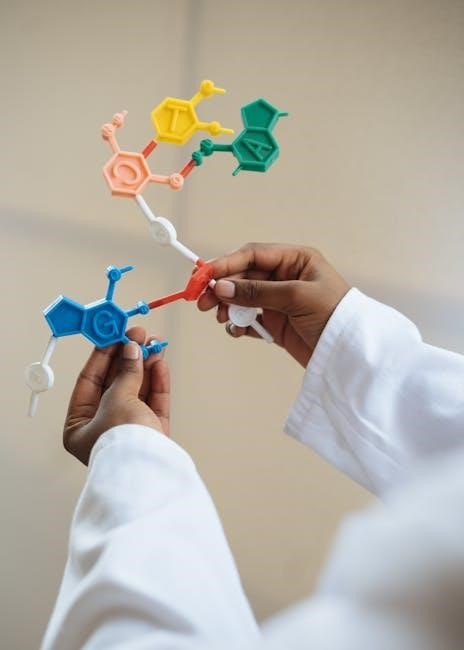

Causal Graphs and Directed Acyclic Graphs (DAGs)

Causal graphs and Directed Acyclic Graphs (DAGs) are essential tools for visualizing and modeling causal relationships in data. A causal graph represents variables as nodes and the causal links between them as directed edges; a DAG additionally contains no directed cycles, so no variable can be its own cause through a feedback loop. These structures are fundamental for identifying confounders, mediators, and direct/indirect causal pathways. In Python, libraries like `dowhy` and `networkx` can be used to create and analyze DAGs. Causal graphs guide the choice of variables to adjust for, which is what makes unbiased estimates of causal effects possible. They are widely used in fields like business analytics, healthcare, and social sciences to map complex causal relationships and make assumptions explicit so they can be reviewed. Properly constructed DAGs are critical for applying methods like do-calculus and for reasoning about interventions.
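A DAG can be represented with a plain adjacency list, without any library. The sketch below uses a hypothetical four-variable graph and illustrative helper names (`descendants`, `common_causes`, `mediators`); it only handles the simple notion of a common cause and is not a full implementation of the back-door criterion.

```python
# Hypothetical DAG as an adjacency list: parent -> list of children.
dag = {
    "age": ["exercise", "health"],
    "exercise": ["fitness"],
    "fitness": ["health"],
    "health": [],
}

def descendants(graph, node, blocked=frozenset()):
    """Nodes reachable from `node` along directed edges, never entering `blocked`."""
    seen, stack = set(), [node]
    while stack:
        current = stack.pop()
        for child in graph.get(current, []):
            if child not in seen and child not in blocked:
                seen.add(child)
                stack.append(child)
    return seen

def is_acyclic(graph):
    """A graph is acyclic if no node can reach itself."""
    return all(node not in descendants(graph, node) for node in graph)

def common_causes(graph, treatment, outcome):
    """Nodes that reach the treatment and reach the outcome by a path avoiding the treatment."""
    return sorted(
        node for node in graph
        if node not in (treatment, outcome)
        and treatment in descendants(graph, node)
        and outcome in descendants(graph, node, blocked={treatment})
    )

def mediators(graph, treatment, outcome):
    """Nodes lying on a directed path from the treatment to the outcome."""
    return sorted(
        node for node in descendants(graph, treatment)
        if outcome in descendants(graph, node)
    )

print(is_acyclic(dag))                           # True
print(common_causes(dag, "exercise", "health"))  # ['age']
print(mediators(dag, "exercise", "health"))      # ['fitness']
```

In this graph `age` should be adjusted for when estimating the total effect of `exercise` on `health`, while `fitness` should not, because it carries part of the effect.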

Identifying Confounders and Causal Pathways

Identifying confounders and causal pathways is crucial for unbiased causal inference. Confounders are variables that influence both the treatment and the outcome, leading to spurious associations. Statistical associations alone cannot tell a confounder apart from a mediator or a collider, so confounders are usually identified from a causal graph built with domain knowledge, for example by applying the back-door criterion to find a set of variables to adjust for. Causal pathways, such as direct or indirect effects, can be examined using methods like mediation analysis. Python libraries like `dowhy` support this step by deriving an adjustment set from a user-supplied graph and visualizing the causal structure. Proper identification ensures accurate estimation of treatment effects by adjusting for confounding variables while leaving mediators and colliders unadjusted. This step is vital for valid causal inferences and reliable policy interventions.

Do-Operator and Interventional Distribution

The do-operator, introduced by Pearl, represents interventions in a system: do(X = x) sets the variable X to the value x regardless of its usual causes. It is a fundamental concept in causal inference, enabling the estimation of outcomes under hypothetical interventions. The interventional distribution P(Y | do(X = x)) describes the distribution of the outcome after such an intervention, and it generally differs from the observational conditional P(Y | X = x) when confounding is present. In Python, libraries like `dowhy` and `pgmpy` provide tools for working with the do-operator and interventional distributions. These tools help researchers simulate interventions and understand the causal effects of variables. The do-operator is essential for testing causal hypotheses and predicting outcomes of policy interventions, making it a cornerstone of modern causal inference.
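The difference between conditioning and intervening can be worked through on a small discrete example. The sketch below assumes a single binary confounder Z that satisfies the back-door criterion for X and Y, and uses invented probabilities; the interventional quantity is computed with the back-door adjustment formula, P(Y | do(X = x)) = Σ_z P(Y | x, z) P(z).

```python
# Hypothetical distribution: Z confounds X and Y (all variables binary).
p_z = {0: 0.5, 1: 0.5}                       # P(Z = z)
p_x1_given_z = {0: 0.2, 1: 0.8}              # P(X = 1 | Z = z)
p_y1_given_xz = {(0, 0): 0.1, (1, 0): 0.3,   # P(Y = 1 | X = x, Z = z)
                 (0, 1): 0.5, (1, 1): 0.7}

def p_x_given_z(x, z):
    return p_x1_given_z[z] if x == 1 else 1 - p_x1_given_z[z]

def p_y1_given_x(x):
    """Observational P(Y=1 | X=x): strata are weighted by P(z | x)."""
    p_x = sum(p_x_given_z(x, z) * p_z[z] for z in p_z)
    return sum(p_y1_given_xz[(x, z)] * p_x_given_z(x, z) * p_z[z] / p_x for z in p_z)

def p_y1_do_x(x):
    """Interventional P(Y=1 | do(X=x)): strata are weighted by P(z)."""
    return sum(p_y1_given_xz[(x, z)] * p_z[z] for z in p_z)

associational = p_y1_given_x(1) - p_y1_given_x(0)   # 0.62 - 0.18 = 0.44
causal = p_y1_do_x(1) - p_y1_do_x(0)                # 0.50 - 0.30 = 0.20
print(round(associational, 2), round(causal, 2))
```

The associational contrast is more than twice the causal one because units with Z = 1 are both more likely to receive X = 1 and more likely to have Y = 1.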

Assumptions in Causal Inference

Causal inference relies on key assumptions like unconfoundedness, positivity, and SUTVA to establish valid causal relationships and ensure unbiased treatment effect estimates from observational data.

Stable Unit Treatment Value Assumption (SUTVA)

The Stable Unit Treatment Value Assumption (SUTVA) has two parts: the treatment assigned to one unit does not affect the outcomes of other units (no interference), and there is a single, well-defined version of each treatment level. This assumption is vital in causal inference because it lets each unit’s potential outcomes depend only on that unit’s own treatment, allowing valid comparisons between treated and control groups. If SUTVA is violated, treatment effect estimates may be biased, as one unit’s treatment could affect another’s outcome. For example, in a medical study, if patients share resources, their outcomes might be interdependent, violating SUTVA. In Python-based causal analysis, libraries like DoWhy and CausalInference assume SUTVA rather than test it, so it has to be justified through study design, for example by randomizing at the level of clusters within which interference is contained.

Positivity Assumption

The Positivity Assumption (also called overlap or common support) requires that every unit has a probability strictly between zero and one of receiving the treatment, given its covariates. This ensures overlap between treated and control groups, enabling valid comparisons. If units with certain covariate values never receive treatment (probability = 0) or always receive it (probability = 1), there are no comparable units in the other group, and effects for them can only be estimated by extrapolation. This assumption guarantees that treatment assignment is not deterministic and allows for the identification of causal effects. In Python, estimated propensity scores, for example those produced by the CausalInference library, can be inspected to check for violations. Violations lead to unreliable estimates, but methods like restricting the sample to the region of overlap or truncating extreme weights can address them, at the cost of changing the population the estimate applies to.
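With discrete covariates, a first positivity check is simply to count treated and untreated units in each stratum. The sketch below uses invented records and hypothetical helper names; an empirical rate of 0 or 1 in a small sample is a warning sign rather than proof of a structural violation.

```python
from collections import Counter

# Hypothetical records: (covariate stratum, treatment indicator).
records = [
    ("young", 1), ("young", 0), ("young", 1),
    ("middle", 0), ("middle", 1),
    ("old", 1), ("old", 1),
]

def treatment_rates(rows):
    """Empirical share of treated units within each stratum."""
    totals, treated = Counter(), Counter()
    for stratum, t in rows:
        totals[stratum] += 1
        treated[stratum] += t
    return {s: treated[s] / totals[s] for s in totals}

def positivity_violations(rows):
    """Strata in which every unit, or no unit, is treated."""
    return sorted(s for s, rate in treatment_rates(rows).items() if rate in (0.0, 1.0))

print(positivity_violations(records))   # ['old']
```

Here the "old" stratum contains no untreated units, so any effect estimate for that group would rest on extrapolation from the other strata.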

Unconfoundedness Assumption

The Unconfoundedness Assumption states that all confounding variables influencing both treatment assignment and the outcome are measured and accounted for in the analysis. This ensures that, after adjustment, systematic differences between treated and control groups are due to the treatment, not unmeasured factors. In causal inference, this assumption is critical for identifying true causal effects. Violations occur when unmeasured confounders exist, leading to biased estimates. Matching, stratification, and regression adjustment only remove bias from measured confounders; when important confounders are unmeasured, alternatives such as instrumental variables or sensitivity analysis are needed. In Python, libraries such as DoWhy and CausalInference provide methods to incorporate measured covariates. Because the assumption cannot be verified from the data alone, it has to be argued from domain knowledge.

Methodologies for Causal Inference

Methodologies for causal inference include experimental and observational approaches, implemented in Python to establish cause-effect relationships, crucial for data-driven decision-making.

Randomized Controlled Trials (RCTs)

Randomized Controlled Trials (RCTs) are the gold standard for establishing causality. By randomly assigning subjects to treatment or control groups, RCTs make treatment independent of both measured and unmeasured confounders, so the groups are comparable on average. This design ensures that systematic differences in outcomes between groups can be attributed to the intervention. In Python, analyzing an RCT involves computing the difference in mean outcomes between groups, attaching a confidence interval, and performing hypothesis tests; simulating random assignment is a useful way to practice. Libraries like DoWhy and CausalInference can also be used to analyze experimental data. RCTs are particularly valuable in scenarios where causal relationships are unclear, offering robust evidence for decision-making in fields such as healthcare, social sciences, and business analytics.
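The analysis of a simple two-arm trial can be sketched with NumPy alone. The data below are simulated under an assumed true effect of 0.5, and the interval uses a normal approximation; all names and numbers are illustrative.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 10_000

# Simulated trial: exactly half of the units are assigned to treatment at random.
baseline = rng.normal(10, 2, n)
assigned = rng.permutation(n) < n // 2
true_effect = 0.5
outcome = baseline + true_effect * assigned + rng.normal(0, 1, n)

treated, control = outcome[assigned], outcome[~assigned]

# Difference in means estimates the average treatment effect under randomization.
ate_hat = treated.mean() - control.mean()

# Standard error allowing unequal variances, and a 95% normal-approximation interval.
se = np.sqrt(treated.var(ddof=1) / treated.size + control.var(ddof=1) / control.size)
ci = (ate_hat - 1.96 * se, ate_hat + 1.96 * se)
z_stat = ate_hat / se

print(f"ATE estimate: {ate_hat:.3f}, 95% CI: ({ci[0]:.3f}, {ci[1]:.3f}), z = {z_stat:.1f}")
```

No covariate adjustment is needed for an unbiased estimate here, because random assignment makes `baseline` independent of treatment.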

Matching Methods for Observational Data

Matching methods are essential in observational studies to reduce bias by making treatment and control groups more comparable. Common techniques include propensity score matching, nearest neighbor matching, and exact matching. Propensity scores estimate the likelihood of receiving treatment based on observed characteristics. These methods help balance groups, mimicking randomization in RCTs. In Python, libraries like DoWhy and CausalInference offer tools for implementing matching. However, challenges remain, such as unobserved confounders and ensuring all variables are accurately matched. Evaluation involves checking balance metrics to confirm comparability. Despite limitations, matching methods are crucial for causal inference in real-world applications, like evaluating marketing campaigns, where RCTs are infeasible.
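As a minimal illustration of matching, the sketch below performs one-to-one nearest-neighbor matching with replacement on a single observed covariate, rather than on an estimated propensity score. The data are simulated with a true effect of 2.0 and a treatment probability that rises with the covariate; everything here is an assumption made for the example.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 2_000

# Simulated observational data: units with larger x are more likely to be treated.
x = rng.uniform(0, 1, n)
treated = rng.uniform(size=n) < 0.2 + 0.6 * x
outcome = 2.0 * treated + 3.0 * x + rng.normal(0, 0.5, n)

x_t, y_t = x[treated], outcome[treated]
x_c, y_c = x[~treated], outcome[~treated]

# For each treated unit, find the control unit with the closest covariate value.
nearest = np.abs(x_t[:, None] - x_c[None, :]).argmin(axis=1)

# Effect on the treated: mean difference between treated units and their matches.
att_matched = (y_t - y_c[nearest]).mean()
naive = y_t.mean() - y_c.mean()

# Balance check: covariate gap between groups before and after matching.
gap_before = x_t.mean() - x_c.mean()
gap_after = x_t.mean() - x_c[nearest].mean()

print(f"naive: {naive:.2f}, matched: {att_matched:.2f}")
print(f"covariate gap before: {gap_before:.3f}, after: {gap_after:.4f}")
```

The balance check is the important diagnostic: matching is only credible if the covariate gap shrinks substantially, and it still says nothing about covariates that were never measured.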

Instrumental Variables Approach

The instrumental variables (IV) approach is a statistical method used to estimate causal effects in the presence of unobserved confounding. It relies on identifying an instrument, a variable that influences the treatment but does not affect the outcome except through the treatment. The instrument supplies variation in the treatment that is unrelated to the confounders, in a way similar to randomization. Key assumptions are that the instrument is relevant (associated with the treatment), excludable (no direct effect on the outcome), and unconfounded (shares no unmeasured causes with the outcome). In Python, libraries like statsmodels and linearmodels provide tools for IV estimation. For example, linearmodels offers an `IV2SLS` estimator for two-stage least squares (2SLS) regression. This approach is widely used in economics and social sciences for robust causal inference.
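The two stages of 2SLS can be written out by hand to show what the estimator does. The sketch below simulates data with an unobserved confounder `u`, a valid instrument `z`, and an assumed true effect of 1.5; it uses plain least squares from NumPy rather than a dedicated IV routine.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 50_000

# Simulated data: u is an unobserved confounder, z is a valid instrument.
u = rng.normal(size=n)
z = rng.normal(size=n)
t = 0.8 * z + u + rng.normal(size=n)
y = 1.5 * t + 2.0 * u + rng.normal(size=n)

def ols(X, target):
    """Least-squares coefficients of target on the columns of X."""
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    return coef

ones = np.ones(n)

# Naive regression of y on t is biased because u is omitted.
naive = ols(np.column_stack([ones, t]), y)[1]

# Stage 1: predict the treatment from the instrument.
Z = np.column_stack([ones, z])
t_hat = Z @ ols(Z, t)

# Stage 2: regress the outcome on the predicted treatment.
iv_estimate = ols(np.column_stack([ones, t_hat]), y)[1]

print(f"naive: {naive:.2f}, 2SLS: {iv_estimate:.2f}")
```

The point estimate from the manual second stage is the 2SLS estimate, but the standard errors from that regression would be wrong, which is one reason to use a dedicated IV estimator in real analyses.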

Difference-in-Differences Method

The Difference-in-Differences (DiD) method is a widely used approach in causal inference to estimate the effect of a treatment or intervention. It compares the change in outcomes over time between a treated group and a control group, assuming that both groups would have exhibited similar trends in the absence of treatment. This method is particularly useful for observational data where randomization is not feasible. The key assumption is parallel trends: without treatment, the treated group’s outcomes would have followed the same trend as the control group’s. Similar pre-treatment trends support this assumption but do not prove it. In Python, libraries like pandas and statsmodels can be used to implement DiD analysis. The method removes bias from confounders that are constant over time, even if unobserved, but it is limited by its reliance on the parallel trends assumption and remains vulnerable to unobserved time-varying confounders.
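The basic two-group, two-period DiD estimate is just arithmetic on four group means. The sketch below uses a handful of invented observations and a hypothetical helper `group_mean`.

```python
# Hypothetical observations: (group, period, outcome).
rows = [
    ("treated", "pre", 9.0), ("treated", "pre", 11.0),
    ("treated", "post", 14.0), ("treated", "post", 16.0),
    ("control", "pre", 7.0), ("control", "pre", 9.0),
    ("control", "post", 9.0), ("control", "post", 11.0),
]

def group_mean(data, group, period):
    values = [y for g, p, y in data if g == group and p == period]
    return sum(values) / len(values)

# Change over time within each group.
change_treated = group_mean(rows, "treated", "post") - group_mean(rows, "treated", "pre")
change_control = group_mean(rows, "control", "post") - group_mean(rows, "control", "pre")

# DiD: the treated group's change minus the change it would have had under parallel trends.
did_estimate = change_treated - change_control

print(change_treated, change_control, did_estimate)   # 5.0 2.0 3.0
```

The same number is the coefficient on the group-by-period interaction in a regression of the outcome on group, period, and their product, which is how DiD is usually estimated when covariates or standard errors are needed.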

Implementation in Python

In Python, causal inference can be implemented using libraries like DoWhy and CausalInference. These libraries provide tools for estimating causal effects from observational data. DoWhy supports causal analysis through the potential outcomes framework and causal graphs, while CausalInference offers methods like propensity score matching. Additionally, statsmodels includes tools for regression-based causal modeling. By leveraging these libraries, data scientists can perform robust causal analysis and infer causal relationships effectively in Python.

Using the DoWhy Library in Python

The DoWhy library is a popular Python package designed specifically for causal inference. It organizes an analysis into four steps: modeling the causal assumptions, identifying the estimand, estimating the effect, and refuting the estimate. DoWhy combines graphical causal models with the potential outcomes framework and supports various estimation methods, including propensity score matching and instrumental variables. Given a causal graph or a list of common causes, it derives which variables to adjust for and offers refutation tests that probe how robust an estimate is. The library works with Pandas DataFrames and can use Scikit-learn models, making it versatile for real-world applications. By structuring complex causal analysis workflows, DoWhy has become a go-to tool for data scientists exploring causal relationships in Python.

Using CausalInference Library in Python

The CausalInference library in Python is a powerful tool for estimating causal effects in observational and experimental data. It provides methods like matching, weighting, and subclassification to adjust for confounders. The library supports propensity score-based techniques and allows for robust estimation of treatment effects. CausalInference is particularly useful for observational studies where randomization cannot be assumed. It offers flexibility in handling different data types and is compatible with Pandas for seamless integration into data workflows. By simplifying causal effect estimation, CausalInference enables data scientists to draw meaningful insights from complex datasets, making it a valuable asset for applied causal inference tasks.

Implementing Causal Inference with Python Code

Implementing causal inference in Python involves several steps to estimate causal effects accurately. First, data preprocessing ensures variables are properly formatted. Next, libraries like DoWhy or CausalInference are used to estimate treatment effects. For example, the do operator in DoWhy allows simulating interventions. Researchers can also perform causal inference using matching methods or instrumental variables. Code often includes functions to check assumptions like unconfoundedness and positivity. Visualizing results with libraries like Matplotlib or Seaborn helps communicate findings. Best practices include validating results with domain knowledge and ensuring code is modular and well-documented. This structured approach enables reliable causal analysis in Python, making it accessible for data scientists.
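The steps above can be sketched with DoWhy's `CausalModel` workflow. The example assumes that `dowhy` and `pandas` are installed, uses simulated data with one measured confounder `w` and a true effect of 2.0, and picks linear regression as the estimator purely for illustration; `simulate` and `estimate_with_dowhy` are hypothetical helper names.

```python
import numpy as np

def simulate(n=5_000, seed=0):
    """Hypothetical data: w confounds a binary treatment t and an outcome y (true effect 2.0)."""
    rng = np.random.default_rng(seed)
    w = rng.normal(size=n)
    t = (w + rng.normal(size=n) > 0).astype(int)
    y = 2.0 * t + 1.5 * w + rng.normal(size=n)
    return {"w": w, "t": t, "y": y}

def estimate_with_dowhy(columns):
    # Imported lazily so that simulate() can be used without these packages installed.
    import pandas as pd
    from dowhy import CausalModel

    df = pd.DataFrame(columns)

    # 1. Model: state the causal assumptions (here, w is the only common cause).
    model = CausalModel(data=df, treatment="t", outcome="y", common_causes=["w"])

    # 2. Identify: derive an estimand from the assumed graph.
    estimand = model.identify_effect(proceed_when_unidentifiable=True)

    # 3. Estimate: back-door adjustment via linear regression.
    estimate = model.estimate_effect(estimand, method_name="backdoor.linear_regression")

    # 4. Refute: adding a random common cause should leave the estimate nearly unchanged.
    refutation = model.refute_estimate(estimand, estimate, method_name="random_common_cause")
    return estimate.value, refutation

if __name__ == "__main__":
    value, refutation = estimate_with_dowhy(simulate())
    print(value)
    print(refutation)
```

A refutation that passes does not prove the causal model is right; it only fails to find a problem of the specific kind it tests for.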

Causal Discovery in Python

Causal discovery in Python identifies causal relationships from observational data using algorithms like PC or GES, enabling insights into underlying mechanisms and supporting decision-making processes effectively.

What is Causal Discovery?

Causal discovery is the process of identifying causal relationships from observational data. It aims to uncover underlying mechanisms and dependencies, distinguishing causation from correlation. Unlike traditional statistical methods, causal discovery focuses on directed relationships, helping to determine how variables influence one another. This approach is particularly valuable in scenarios where randomized controlled trials are impractical. By leveraging algorithms such as Bayesian networks or structural equation modeling, causal discovery provides insights into causal structures, enabling better decision-making and policy interventions. It is a cornerstone of causal inference, bridging the gap between data analysis and actionable causal insights.

Algorithms for Causal Discovery

Several algorithms are used for causal discovery, including the PC algorithm, GES (Greedy Equivalence Search), and CAM (Causal Additive Models). The PC algorithm is a widely used constraint-based method that employs conditional independence tests to remove edges and orient the remaining ones. GES, on the other hand, is score-based: it greedily adds and then removes edges to optimize a score such as BIC. CAM fits additive models to handle non-linear relationships. Python libraries such as causal-learn implement PC and GES, enabling researchers to apply them to real-world data, and a discovered graph can then be passed to DoWhy for effect estimation. Because these algorithms typically recover only an equivalence class of graphs and depend on assumptions such as faithfulness, their output should be reviewed with domain knowledge. By automating the search, these tools make causal discovery more accessible and scalable.
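The core step of a constraint-based method like PC is a conditional independence test. The sketch below is not the PC algorithm itself; it shows a single test on simulated data from an assumed chain x -> y -> z, using partial correlation, which is appropriate only for roughly linear, Gaussian relationships.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 20_000

# Simulated chain: x causes y, and y causes z. There is no direct x -> z edge.
x = rng.normal(size=n)
y = 0.8 * x + rng.normal(size=n)
z = 0.8 * y + rng.normal(size=n)

def partial_corr(a, b, given):
    """Correlation between a and b after linearly regressing `given` out of both."""
    G = np.column_stack([np.ones(len(given)), given])
    res_a = a - G @ np.linalg.lstsq(G, a, rcond=None)[0]
    res_b = b - G @ np.linalg.lstsq(G, b, rcond=None)[0]
    return np.corrcoef(res_a, res_b)[0, 1]

marginal = np.corrcoef(x, z)[0, 1]      # x and z are clearly associated
conditional = partial_corr(x, z, y)     # the association vanishes given y

print(f"corr(x, z) = {marginal:.3f}, corr(x, z | y) = {conditional:.3f}")
```

A constraint-based algorithm would use this result to delete the edge between x and z. Orientation of the remaining edges needs further rules, and a chain like this one cannot be distinguished from x <- y <- z or x <- y -> z using independence tests alone.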

Inferring Causal Structures with Python

In Python, causal structure inference is facilitated by libraries such as causal-learn, DoWhy, CausalGraphicalModels, and PyMC (formerly PyMC3). These tools enable researchers to estimate causal relationships from data. The PC algorithm, a popular method, is available in causal-learn and infers causal graphs by testing conditional independence, while CausalGraphicalModels is used to describe and manipulate causal graphs. Bayesian approaches, like Bayesian Structural Equation Modeling (BSEM), are also used to model causal relationships. Visualization libraries such as NetworkX and Plotly help in rendering causal graphs for better understanding. Additionally, DoWhy provides an intuitive API for causal inference, allowing users to derive adjustment sets from a graph and test causal assumptions. These tools make it easier to translate theoretical causal models into practical implementations for real-world data analysis.

Applications of Causal Inference

Causal inference is widely applied in business analytics, public policy, and healthcare to make data-driven decisions, evaluate interventions, and understand cause-effect relationships in complex systems.

Causal Inference in Business Analytics

Causal inference is revolutionizing business analytics by enabling companies to uncover cause-effect relationships, driving strategic decisions. In A/B testing, it helps determine which product version yields better results. By applying causal methods to customer data, businesses can segment audiences based on causal behaviors, leading to personalized marketing strategies. Additionally, causal inference aids in assessing the true impact of marketing campaigns on sales and customer engagement. It also guides pricing strategies by analyzing how price changes causally affect demand. Moreover, causal insights optimize resource allocation by identifying the most impactful investments. With Python’s robust libraries, implementing these analyses is seamless, making causal inference an indispensable tool in modern business analytics.

Public Policy and Social Interventions

Causal inference plays a vital role in public policy and social interventions by enabling policymakers to evaluate the effectiveness of programs and interventions. For instance, it helps determine whether a policy, such as a job training program, causally increases employment rates or income levels. By identifying cause-effect relationships, policymakers can allocate resources more effectively and design interventions that address root causes rather than symptoms. In social interventions, causal methods are used to assess the impact of initiatives like education reforms or public health campaigns. Python libraries such as DoWhy and CausalInference provide tools to analyze and estimate causal effects, aiding in evidence-based decision-making for societal benefit.

Causal Inference in Healthcare and Medicine

Causal inference is transformative in healthcare and medicine, enabling researchers to determine the causal effects of treatments, drugs, or interventions on patient outcomes. It helps clinicians and policymakers make informed decisions about which treatments are most effective. For example, causal methods can identify whether a new drug causally reduces disease progression or if a surgical procedure improves long-term survival rates. In healthcare, observational data is often used due to the ethical and practical challenges of randomized controlled trials (RCTs). Python libraries like DoWhy and CausalInference provide robust tools for analyzing causal relationships in healthcare datasets, such as electronic health records (EHRs) or clinical trial data. These methods also support personalized medicine by identifying subgroups that benefit most from specific treatments, ultimately improving patient care and outcomes.

Resources for Learning

Explore books, online tutorials, and courses to master causal inference in Python. Utilize libraries like DoWhy and CausalInference for practical implementations and real-world applications.

Recommended Books on Causal Inference

Causal inference is a fundamental concept in data science, and several books provide in-depth insights into its principles and applications. “Causal Inference: The Mixtape” by Scott Cunningham offers an accessible introduction to causal reasoning, making it ideal for beginners. Another essential read is “Causal Inference in Statistics: A Primer” by Judea Pearl, Madelyn Glymour, and Nicholas P. Jewell, which provides a comprehensive understanding of causal frameworks. Additionally, “The Book of Why” by Judea Pearl and Dana Mackenzie explores the science of cause and effect in an engaging manner. These books are valuable resources for anyone looking to master causal inference, especially in the context of Python implementations and data analysis.

Online Tutorials and Courses

For learners seeking structured guidance, online tutorials and courses on causal inference are readily available. Platforms like Coursera, edX, and Udemy offer courses taught by experts, such as the University of Pennsylvania’s “Causal Inference” series. These courses cover foundational concepts, practical implementations, and real-world applications. Additionally, specialized resources like Kaggle tutorials provide hands-on experience with causal inference using Python. The DoWhy library’s official tutorials are another excellent resource for implementing causal inference in Python. Community-driven platforms like Reddit’s r/statistics and r/datascience often share valuable resources and discussions. These online resources cater to diverse learning styles and skill levels, ensuring comprehensive understanding and practical application of causal inference techniques.

Conclusion

Causal inference is a powerful tool for uncovering causal relationships in data science. By addressing confounders and biases, it bridges correlation and causation, enabling informed decision-making across industries.

Importance of Causal Inference in Modern Data Science

Causal inference is essential in modern data science as it enables researchers to move beyond mere correlations and uncover true causal relationships. This is critical for making informed decisions, predicting outcomes, and optimizing interventions. By addressing confounders and biases, causal methods provide a robust framework for understanding cause-and-effect dynamics in complex systems. In industries like healthcare, business, and public policy, causal inference helps evaluate the impact of treatments, policies, and interventions. It bridges the gap between data analysis and actionable insights, ensuring decisions are grounded in causal evidence rather than mere associations. As data-driven decision-making grows, causal inference becomes a cornerstone of modern analytics.

Future Trends in Causal Inference Research

Future trends in causal inference research are expected to focus on advancing methodologies to handle complex, high-dimensional data. Machine learning techniques will play a larger role in identifying causal relationships and addressing confounders. There will be greater emphasis on causal discovery algorithms that can uncover hidden causal structures in large datasets. Additionally, integrating causal inference with reinforcement learning and artificial intelligence will open new avenues for dynamic decision-making. Researchers are also likely to explore Bayesian approaches and non-parametric methods to improve robustness. The development of user-friendly Python libraries and tools will further democratize causal inference, enabling practitioners to apply these methods in real-world scenarios. These advancements promise to make causal inference more accessible and impactful across disciplines.